Impact

About the company

Role

UX Designer

Contribution

Research, Strategy, Testing & Design

Team

1 Lead UX Designer, 2 PMs, 7 Engineers

Timeline

Feb 2021 - Jun 2021

Highlights

Watch it in action

The product has since been rebranded, but the core design survived with minimal changes.

Read TL;DR

Problem & Opportunities

Managers could not evaluate agents' call quality performance

Contact Center Managers had no built-in way to evaluate or coach agents within Dialpad. They relied on spreadsheets or paid third-party tools for call quality assessment, which created inconsistent, manual, and siloed processes.

Without structured evaluation workflows, managers couldn’t provide timely feedback or identify trends across their teams. This led to slow ramp-up for new hires, inconsistent service quality, and higher agent turnover, all of which hurt customer satisfaction and operational efficiency.

Call Agents

Problem

Receive delayed or inconsistent feedback, which slows ramp-up and often leaves them wanting to rage-resign.

Goals

View feedback immediately based on performance.

Managers / Reviewers

Problem

QA processes were inconsistent across tools and across reviewers.

Goals

Have a fast, flexible way to evaluate agent performance.

With the problem in mind, we asked:

How might we help managers spend less time scoring and more time coaching, all within Dialpad?

For Business

Differentiate Dialpad by turning coaching into a core feature.

Strengthen the Contact Center offering with native quality evaluation tools.

For Product

Introduce a scalable framework for scorecard creation, grading, and feedback.

Reduce dependency on external vendors and increase stickiness for enterprise customers.

Solutions

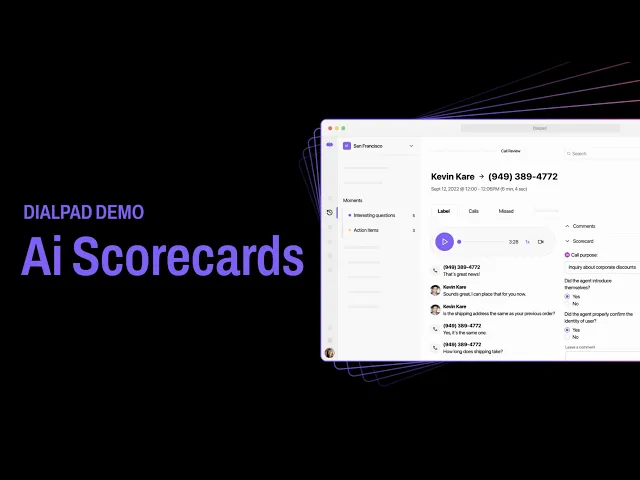

An Integrated Scorecard Platform for Smarter Performance Evaluation

The solution reimagines call evaluation as a connected ecosystem rather than a series of disconnected tools. I designed a platform where managers can build reusable scorecards, assess calls in bulk, and deliver targeted feedback, all powered by Dialpad’s voice intelligence. The result is a streamlined coaching experience that scales across teams, eliminates manual overhead, and elevates overall call quality.

The redesigned AI Scorecard transformed how Contact Center Managers evaluate calls, coach agents, and track performance within Dialpad. The system was built to balance flexibility, automation, and clarity, enabling teams to scale their coaching programs without adding operational overhead.

Customizable Scorecard Builder

Managers can create reusable templates tailored to their teams. The builder supports weighted criteria, question types, and categories, making evaluation versatile, consistent and transparent across the organization.

Impact: Reduced setup time and improved coaching consistency across distributed teams.
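
To illustrate how weighted criteria might roll up into a single score, here is a minimal sketch. The `Question` and `Scorecard` names, the 0-to-1 answer scale, and the normalization by total weight are illustrative assumptions, not Dialpad's actual data model.

```python
from dataclasses import dataclass, field


@dataclass
class Question:
    """One scorecard criterion (hypothetical structure)."""
    text: str
    category: str
    weight: float  # relative importance; assumed to be positive


@dataclass
class Scorecard:
    """A reusable template made of weighted questions."""
    name: str
    questions: list[Question] = field(default_factory=list)

    def score(self, answers: dict[str, float]) -> float:
        """Return a 0-100 weighted score.

        `answers` maps question text to a value between 0.0 and 1.0.
        Unanswered questions are skipped so they do not lower the score.
        """
        graded = [q for q in self.questions if q.text in answers]
        total_weight = sum(q.weight for q in graded)
        if total_weight == 0:
            return 0.0
        earned = sum(q.weight * answers[q.text] for q in graded)
        return round(100 * earned / total_weight, 1)


# Example template: resolving the issue counts three times as much as the greeting.
template = Scorecard(
    name="Support call QA",
    questions=[
        Question("Greeted the customer", "Opening", weight=1),
        Question("Resolved the issue", "Resolution", weight=3),
    ],
)
result = template.score({"Greeted the customer": 1.0, "Resolved the issue": 0.5})
```

Because the template is defined once and reused, every reviewer grades against the same questions and weights, which is what makes scores comparable across teams.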

Streamlined Grading Flow

Managers can quickly grade calls, add notes, assign feedback, and share calls with peers for collaborative evaluation.

Impact: Increased grading efficiency and more consistent coaching.

Collaborative Coaching & Feedback

Multiple supervisors can review the same call. Inline commenting helps align on quality standards to support consistent coaching language across teams.

Impact: Improved team alignment and faster feedback delivery.

AI-Assisted Suggestions

Dialpad’s voice intelligence analyzes transcripts to auto-suggest answers for some evaluation questions. Reviewers can accept, reject, or edit each recommendation.

Impact: Improved accuracy, reduced manual analysis time, and less subjectivity in scoring.

At-a-Glance Performance Dashboard

A central dashboard gives managers visibility into evaluation trends, coaching frequency, and team performance over time. It acts as both a monitoring tool and a coaching guide, surfacing which agents need support and where overall team quality can improve.

Impact: Enabled data-driven coaching decisions and performance tracking at scale.

Research

Uncovering the Gaps in Quality Evaluation

We conducted 7 user research interviews with managers to better understand their goals and workflows for quality control.

Key insight: Managers spent more time organizing data than actually coaching — revealing a huge opportunity to streamline and automate their workflows.

Based on research, we reframed the challenge around three design goals:

Scalability – Enable consistent coaching across large, distributed teams.

Efficiency – Reduce manual effort and repetitive setup.

Clarity – Make evaluations intuitive for both novice and experienced managers.

These goals informed our north star: “Help managers spend less time scoring and more time coaching.”

In addition to auditing existing pages that could serve as access points, we ran 2 sessions with 15 internal stakeholders, ranging from C-suite executives to engineers and support agents. We asked them to brainstorm and sketch ideas that would be helpful for users.

In the user interviews, managers described grading calls as time-consuming and subjective: they had to read transcripts or listen to calls line by line and manually decide how to score each question.

How might we use existing voice intelligence to assist in the call grading process?

This is where we identified the opportunity to leverage our Voice Intelligence (Vi) to parse the call summary for keywords and sentiment and help managers grade the scorecard.
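
As a rough illustration of the idea, the sketch below suggests an answer for a yes/no question by checking transcript lines for keywords. The function name, the keyword list, and the simple substring matching are hypothetical simplifications; they do not represent how Vi works internally.

```python
from dataclasses import dataclass


@dataclass
class Suggestion:
    """A suggested answer plus the transcript lines that support it."""
    answer: str          # "yes" or "no"
    evidence: list[str]  # matching transcript lines, shown to the reviewer


def suggest_answer(transcript: list[str], keywords: list[str]) -> Suggestion:
    """Suggest "yes" if any keyword appears in the transcript (case-insensitive)."""
    lowered = [k.lower() for k in keywords]
    evidence = [
        line for line in transcript
        if any(k in line.lower() for k in lowered)
    ]
    return Suggestion(answer="yes" if evidence else "no", evidence=evidence)


# Hypothetical question: "Did the agent greet the customer?"
transcript = [
    "Agent: Thank you for calling, how can I help?",
    "Customer: My invoice looks wrong.",
    "Agent: I'm sorry about that, let me fix it.",
]
suggestion = suggest_answer(transcript, ["thank you for calling", "welcome"])
```

The suggestion carries its supporting lines so a reviewer can check the evidence before accepting, rejecting, or editing it; the manager keeps the final say.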

Design, Iterations & Hurdles

The scale of the project was much bigger than the team had anticipated, so we split up the flows and worked in parallel to meet the deadline. I was tasked with designing solutions for:

1.) Creating a rubric

2.) Finding and assigning calls

3.) Scoring calls

Early Iteration

I started with a whitespace-heavy layout where managers created questions one at a time. The intent was to reduce distractions and keep focus on question design.

What we learned

Managers were accustomed to dense dashboards with multiple details in view. The minimalist approach felt too sparse and unfamiliar.

Final Design

We shifted to a compact builder view with side-by-side details, matching managers’ expectations while still keeping the flow structured and approachable.

Early Iteration

I explored integrating grading into the existing call summary page. Low-fidelity sketches tested ways to blend evaluation into transcript views.

Proposed Solution

A refreshed two-column layout gave more real estate to grading. Stakeholders loved the clarity and modern feel.

Trade-off

Engineering flagged risks with rebuilding the transcript page, and many users were already habituated to the existing three-column layout.

Final Decision

We evolved within the three-column pattern — ensuring we could ship on time while maintaining user familiarity.

Mid-way through the project, our PM left the company, and the metrics tracking plan was deprioritized. As a result, much of the quantitative impact we had hoped to capture was not implemented in the MVP.

This was a tough lesson: without clear ownership, even critical success metrics can fall through the cracks. It also reinforced for me how important it is for designers to advocate for metrics early, not just for the UX.

While we didn’t have analytics in the MVP, success would’ve been measured by:

% of managers adopting the tool → signals whether the solution solved real pain

Average time to complete a scorecard → tracks workflow efficiency improvements

Number of evaluations per agent, per month → shows scalability of coaching practices

Accuracy rate of AI-suggested scores → validates whether automation adds real value

Manager satisfaction (CSAT or survey) → captures sentiment around usability and impact
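
For illustration, the sketch below shows how the quantitative metrics above could be computed from a log of completed evaluations. The `Evaluation` fields are hypothetical, the log is assumed to cover one month, and acceptance of AI suggestions is used as a stand-in for accuracy. Manager satisfaction is left out because it would come from a survey rather than from usage data.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Evaluation:
    """One completed scorecard (hypothetical log record)."""
    manager_id: str
    agent_id: str
    seconds_to_complete: float
    ai_suggestions: int  # suggestions shown to the reviewer
    ai_accepted: int     # suggestions accepted without edits


def summarize(evaluations: list[Evaluation], total_managers: int) -> dict[str, float]:
    """Compute adoption, efficiency, coverage, and AI acceptance for one month."""
    if not evaluations or total_managers <= 0:
        return {
            "adoption_rate": 0.0,
            "avg_seconds_per_scorecard": 0.0,
            "evaluations_per_agent": 0.0,
            "ai_acceptance_rate": 0.0,
        }
    managers = {e.manager_id for e in evaluations}
    agents = {e.agent_id for e in evaluations}
    shown = sum(e.ai_suggestions for e in evaluations)
    accepted = sum(e.ai_accepted for e in evaluations)
    return {
        "adoption_rate": len(managers) / total_managers,
        "avg_seconds_per_scorecard": mean(e.seconds_to_complete for e in evaluations),
        "evaluations_per_agent": len(evaluations) / len(agents),
        "ai_acceptance_rate": accepted / shown if shown else 0.0,
    }


# Made-up sample data, for demonstration only.
sample = [
    Evaluation("m1", "a1", 300.0, ai_suggestions=4, ai_accepted=3),
    Evaluation("m1", "a2", 240.0, ai_suggestions=4, ai_accepted=4),
    Evaluation("m2", "a1", 360.0, ai_suggestions=2, ai_accepted=1),
]
metrics = summarize(sample, total_managers=4)
```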

Impact

This project closed a major gap in Dialpad’s Contact Center product. For the first time, managers had a built-in, scalable way to evaluate calls and coach agents, reducing their reliance on external tools and fragmented workflows. By aligning user needs with business strategy and technical feasibility, the AI Scorecard became a foundation for Dialpad’s future coaching and AI initiatives.

Although initially cut from the MVP due to timeline constraints, this feature:

Was rolled out later as a roadmap update

Became one of the most anticipated enhancements

Validated the long-term design direction I had proposed

Kept its core design through multiple company rebrands with minimal changes

Learnings

Balance Design with Feasibility

I learned that pushing for bold redesigns (like the two-column grading layout) can win excitement from stakeholders, but if engineering can’t support it in time, the project risks slipping. Sometimes the best path forward is finding creative ways to evolve existing patterns so the team can deliver value without delay.

Familiarity matters as much as novelty

My early designs leaned into “clean slate” layouts, but user testing showed managers preferred the dense, detail-rich screens they were used to. I realized that building on familiar mental models often leads to faster adoption than introducing something radically new. Innovation needs to meet users where they are.

Prototype beyond MVP

Even when features like semantic analysis suggestions didn’t make the first release, showing exploratory visions built excitement, helped secure stakeholder buy-in, and influenced future roadmap decisions. Designing beyond scope isn’t wasted effort; it sets the direction for what comes next.

Collaboration is critical to outcomes

This project required constant negotiation with PMs, QA managers, engineers, and even sales. I learned that involving each group early, especially engineering, surfaces constraints sooner and avoids rework. The trade-offs we made were easier to align on because everyone felt heard.